Building and deploying Docker images with GitLab CI is fairly straightforward to set up: a few easy steps and a .gitlab-ci.yml file in any repo with a Dockerfile are all you need.

GitLab's documented approach uses the GitLab Runner with a Docker-in-Docker (dind) service to build Docker images and push them to the provided container registry.

Official documentation: Use Docker to build Docker images

As a project's complexity grows, more job steps are added to the GitLab CI pipeline, which ultimately slows down build-and-deploy times.

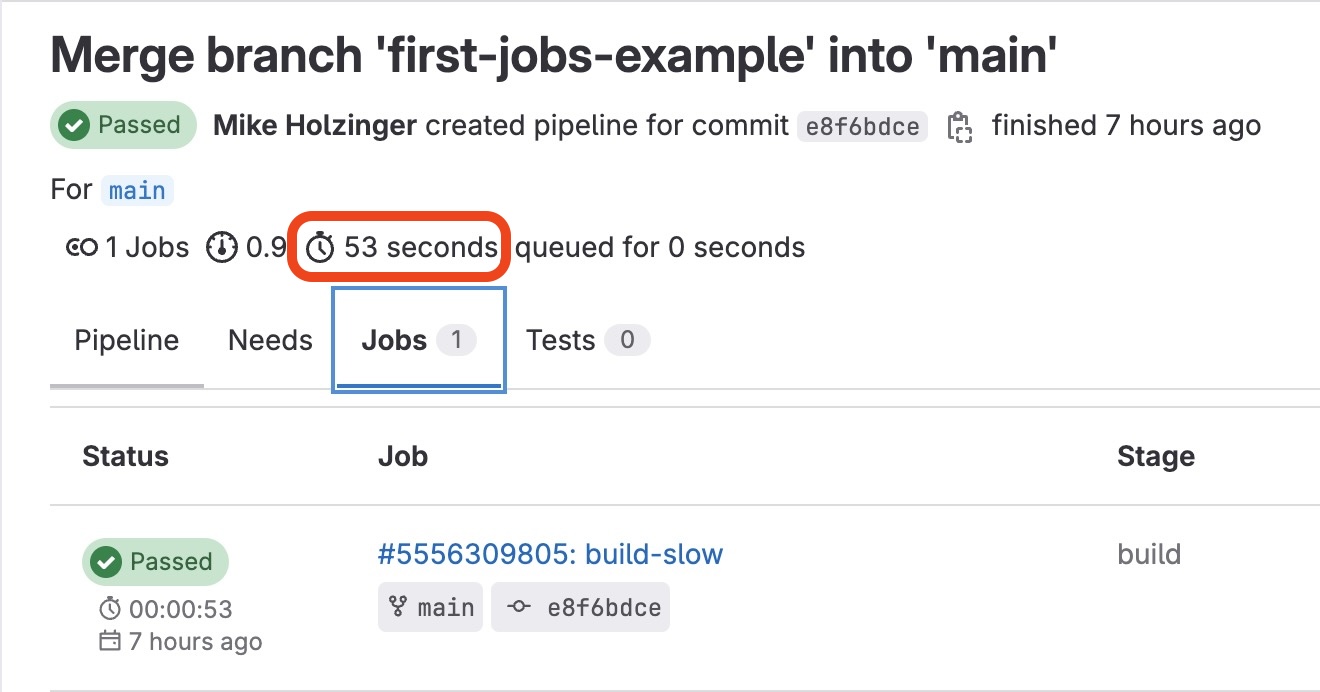

Our first pipeline commit job

The example below builds a Docker image we will call pythonapp-flask, first using dind and then repeating the same steps with kaniko.

```yaml
build:
  stage: build
  image: docker:stable
  services:
    - docker:dind
  variables:
    DOCKER_HOST: tcp://docker:2375
    DOCKER_DRIVER: overlay2
    IMAGE_NAME: registry.gitlab.com/${CI_PROJECT_PATH}/pythonapp-flask
  before_script:
    - docker login -u $CI_REGISTRY_USER -p $CI_REGISTRY_PASSWORD $CI_REGISTRY
  script:
    - docker build -t $IMAGE_NAME .
    - docker push $IMAGE_NAME
```

In our first example, we follow the documented steps to build our container and push it to our container registry under the name pythonapp-flask.

Our source code for the project is here:

- containerbuilding

A project job history can be tracked at:

- containerbuilding/-/pipelines

Speeding things up

While the above example took only 52 seconds on average, we can trim that to less than half the time by replacing our Docker job with kaniko.

Our replacement job uses the kaniko executor project, which lets us collapse multiple Docker steps into a single command in one stage.

Step one

Our first step with kaniko is to create a Docker config.json file that authenticates us to our container registry, just as a docker login command would. For our example, we write config.json using shell redirection and store it under /kaniko/.docker/.

```shell
echo \
  "{\"auths\":{\"$CI_REGISTRY\":{\"username\":\"$CI_REGISTRY_USER\",\"password\":\"$CI_REGISTRY_PASSWORD\"}}}" \
  > /kaniko/.docker/config.json
```

We run this command in the before_script section of our build job. Because the file is written locally on the runner from our CI credentials, we skip a separate login step, which would have added time by calling the container registry and then writing out a file.
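
As a variation on the step above, here is a minimal sketch of a before_script that stores the same credentials as a single base64-encoded auth value (user:password), which is another form Docker's config.json accepts. The mkdir line is an added precaution, and the sketch assumes the base64 and tr utilities are available in the job image.

```yaml
# Sketch only: an alternative before_script for the kaniko build job.
build:
  before_script:
    # Make sure the target directory exists before writing into it.
    - mkdir -p /kaniko/.docker
    # Write the credentials as one base64 "auth" entry instead of
    # separate username/password fields.
    - echo "{\"auths\":{\"${CI_REGISTRY}\":{\"auth\":\"$(printf "%s:%s" "${CI_REGISTRY_USER}" "${CI_REGISTRY_PASSWORD}" | base64 | tr -d '\n')\"}}}" > /kaniko/.docker/config.json
```

Either form is still written locally on the runner, so the job avoids a separate login call.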

Step two

The next step, script:, invokes /kaniko/executor with the build context, our Dockerfile, and a destination in the container registry, using the commit ref slug (the branch or tag name) as the tag for the Docker image we build and push.

We are replacing the two previous Docker steps, docker build and docker push, with one command.

```yaml
stages:
  - build

build:
  stage: build
  image:
    name: gcr.io/kaniko-project/executor:debug
    entrypoint: [""]
  variables:
    DOCKER_CONFIG: /kaniko/.docker/
  before_script:
    - echo "{\"auths\":{\"$CI_REGISTRY\":{\"username\":\"$CI_REGISTRY_USER\",\"password\":\"$CI_REGISTRY_PASSWORD\"}}}" > /kaniko/.docker/config.json
  script:
    - >
      /kaniko/executor --context $CI_PROJECT_DIR
      --dockerfile $CI_PROJECT_DIR/Dockerfile
      --destination $CI_REGISTRY_IMAGE:$CI_COMMIT_REF_SLUG
```

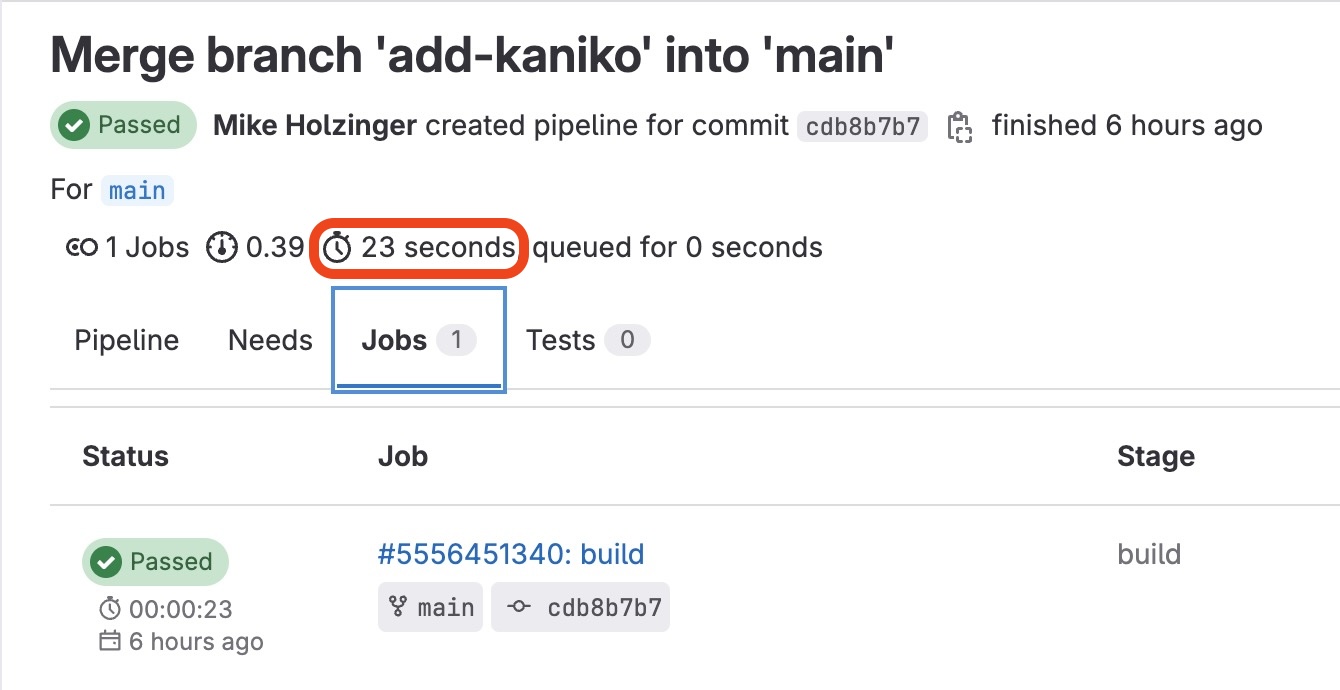

Let's see this in action.

Summary: in the above example, our time to build and deploy dropped to just 23 seconds!

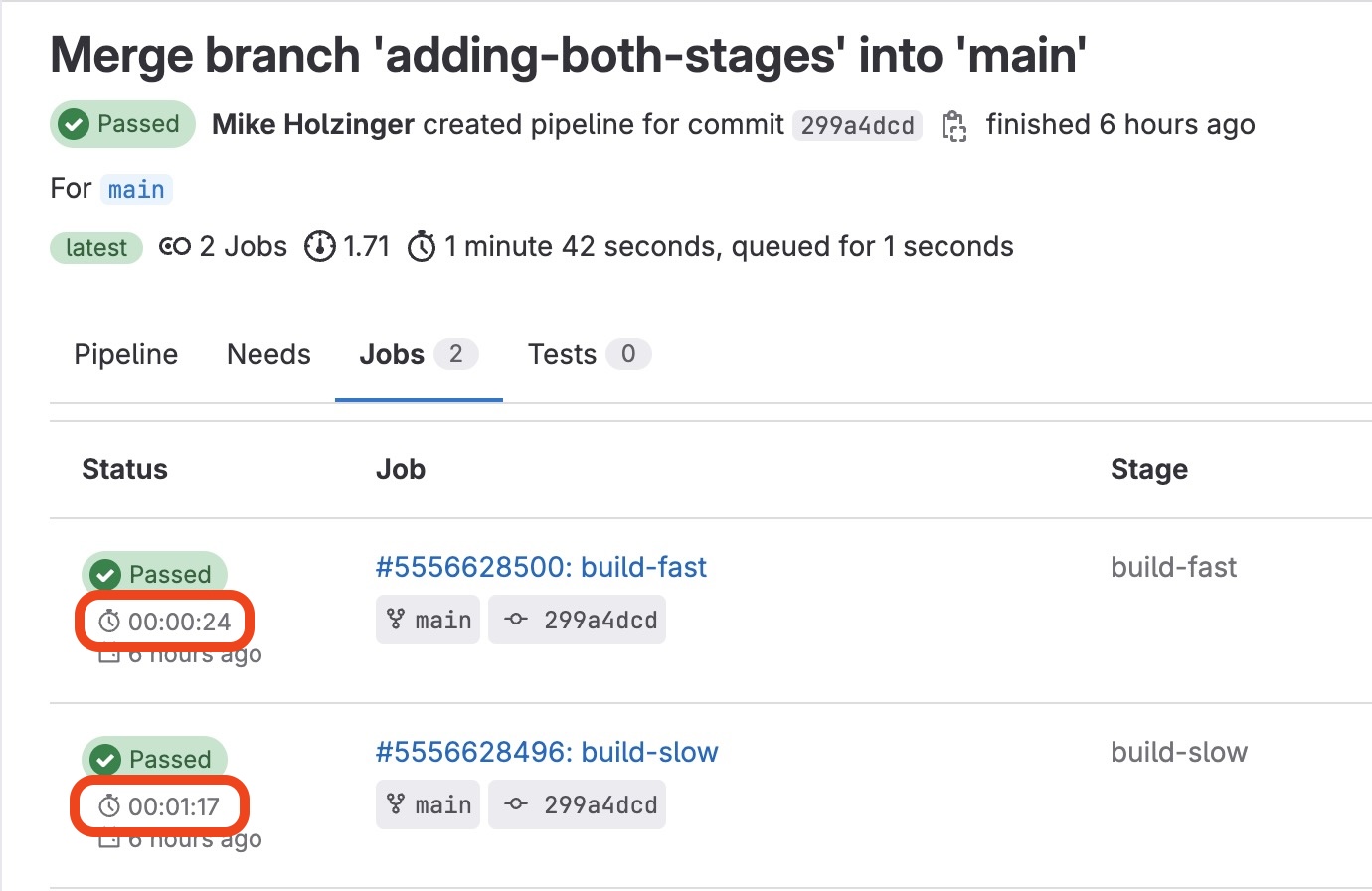

Executing stages side by side

Finally, as a real-world test, we ran both jobs side by side in the same pipeline. Both dind and kaniko demonstrated consistent execution times for our defined pipeline jobs, with dind taking nearly one minute and the kaniko executor finishing in 24 seconds this time.

The real-world benefits of replacing the older dind model with the kaniko executor are faster deploy times and lower cost, since publishing to the container registry takes a single network operation from the GitLab runner.
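
A side-by-side run like this could be sketched by placing both jobs in the same stage, so GitLab schedules them in parallel when runners are available. The job names build-dind and build-kaniko are hypothetical (both earlier examples were simply called build), and the job bodies are copied from the two examples above.

```yaml
stages:
  - build

# Hypothetical job name for the Docker-in-Docker build.
build-dind:
  stage: build
  image: docker:stable
  services:
    - docker:dind
  variables:
    DOCKER_HOST: tcp://docker:2375
    DOCKER_DRIVER: overlay2
    IMAGE_NAME: registry.gitlab.com/${CI_PROJECT_PATH}/pythonapp-flask
  before_script:
    - docker login -u $CI_REGISTRY_USER -p $CI_REGISTRY_PASSWORD $CI_REGISTRY
  script:
    - docker build -t $IMAGE_NAME .
    - docker push $IMAGE_NAME

# Hypothetical job name for the kaniko build.
build-kaniko:
  stage: build
  image:
    name: gcr.io/kaniko-project/executor:debug
    entrypoint: [""]
  variables:
    DOCKER_CONFIG: /kaniko/.docker/
  before_script:
    - echo "{\"auths\":{\"$CI_REGISTRY\":{\"username\":\"$CI_REGISTRY_USER\",\"password\":\"$CI_REGISTRY_PASSWORD\"}}}" > /kaniko/.docker/config.json
  script:
    - >
      /kaniko/executor --context $CI_PROJECT_DIR
      --dockerfile $CI_PROJECT_DIR/Dockerfile
      --destination $CI_REGISTRY_IMAGE:$CI_COMMIT_REF_SLUG
```

Because the two jobs push to different destinations, they do not overwrite each other's image, and the duration of each job can be compared directly in the pipeline view.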